Transcription

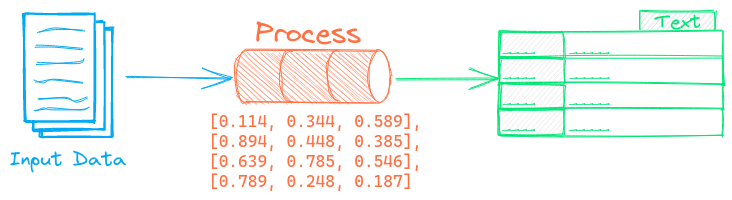

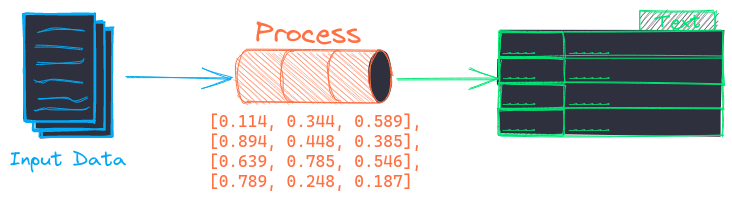

The Transcription pipeline converts speech in audio files to text.

Example

The following shows a simple example using this pipeline.

```python
from txtai.pipeline import Transcription

# Create and run pipeline
transcribe = Transcription()
transcribe("path to wav file")
```

This pipeline may require additional system dependencies. See the installation documentation for details.
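The examples on this page use the placeholder `"path to wav file"`. To check that the pipeline and its audio dependencies load, you can generate a short WAV file with Python's standard `wave` module. This is a minimal sketch: the helper `write_tone_wav`, the file name `tone.wav`, the 16 kHz rate and the 440 Hz tone are arbitrary, hypothetical choices. A pure tone contains no speech, so the transcription result will not be meaningful.

```python
import math
import struct
import wave


def write_tone_wav(path, seconds=1.0, rate=16000, freq=440.0):
    """Write a mono, 16-bit PCM sine tone to `path` (hypothetical test helper)."""
    frames = int(seconds * rate)
    # Scale each sample to half of the signed 16-bit range to avoid clipping
    samples = (
        int(0.5 * 32767 * math.sin(2 * math.pi * freq * n / rate))
        for n in range(frames)
    )
    data = b"".join(struct.pack("<h", s) for s in samples)

    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)   # mono
        wav.setsampwidth(2)   # 16-bit samples
        wav.setframerate(rate)
        wav.writeframes(data)


write_tone_wav("tone.wav")
```

The resulting path can then replace the placeholder, for example `transcribe("tone.wav")`.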

See the notebooks below for more detailed examples.

| Notebook | Description |
|---|---|
| Transcribe audio to text | Convert audio files to text |
| Speech to Speech RAG ▶️ | Full cycle speech to speech workflow with RAG |

Configuration-driven example

Pipelines can be run with Python or with configuration. In configuration, a pipeline is instantiated using the lowercase name of its class. Configuration-driven pipelines are run with workflows or the API.

config.yml

```yaml
# Create pipeline using lower case class name
transcription:

# Run pipeline with workflow
workflow:
  transcribe:
    tasks:
      - action: transcription
```

Run with Workflows

```python
from txtai import Application

# Create and run pipeline with workflow
app = Application("config.yml")
list(app.workflow("transcribe", ["path to wav file"]))
```

Run with API

```bash
CONFIG=config.yml uvicorn "txtai.api:app" &

curl \
  -X POST "http://localhost:8000/workflow" \
  -H "Content-Type: application/json" \
  -d '{"name":"transcribe", "elements":["path to wav file"]}'
```
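The same workflow request can be sent from Python with only the standard library. This sketch assumes the API is running locally on port 8000, as started above. `build_request` is a hypothetical helper name. Building the request does not contact the server; the request is only sent when `urlopen` is called.

```python
import json
import urllib.request


def build_request(elements, name="transcribe", url="http://localhost:8000/workflow"):
    """Build the same POST request as the curl command (hypothetical helper)."""
    payload = json.dumps({"name": name, "elements": elements}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_request(["path to wav file"])

# Uncomment to send the request to a running API instance
# with urllib.request.urlopen(request) as response:
#     print(json.loads(response.read()))
```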

Methods

Python documentation for the pipeline.

__init__(path=None, quantize=False, gpu=True, model=None, **kwargs)

Source code in txtai/pipeline/audio/transcription.py


__call__(audio, rate=None, chunk=10, join=True, **kwargs)

Transcribes audio files or data to text.

This method supports a single audio element or a list of audio elements. With the default `join=True`, a single audio element returns a string and a list returns a list of strings.
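Inputs can be audio files or raw audio data. For raw data, the sample rate must also be passed as `rate`. The sketch below uses only the standard library to read a mono 16-bit PCM WAV into floating point samples plus its sample rate. The helper `read_pcm16` and the scaling to the range [-1, 1) are assumptions made for illustration. The exact raw-data types the pipeline accepts are not documented on this page.

```python
import array
import io
import struct
import sys
import wave


def read_pcm16(source):
    """Return (samples, rate) from a mono 16-bit PCM WAV path or file object (hypothetical helper)."""
    with wave.open(source, "rb") as wav:
        if wav.getnchannels() != 1 or wav.getsampwidth() != 2:
            raise ValueError("expected mono 16-bit PCM audio")
        rate = wav.getframerate()
        raw = wav.readframes(wav.getnframes())

    pcm = array.array("h", raw)
    # WAV data is little-endian; swap bytes on big-endian machines
    if sys.byteorder == "big":
        pcm.byteswap()

    # Scale signed 16-bit integers to floats in [-1.0, 1.0)
    return [value / 32768 for value in pcm], rate


# Build a tiny in-memory WAV (4 frames at 8 kHz) to exercise the helper
buffer = io.BytesIO()
with wave.open(buffer, "wb") as out:
    out.setnchannels(1)
    out.setsampwidth(2)
    out.setframerate(8000)
    out.writeframes(struct.pack("<4h", 0, 16384, -16384, 32767))
buffer.seek(0)

samples, rate = read_pcm16(buffer)
print(rate, samples[:3])  # 8000 [0.0, 0.5, -0.5]
```

A Python list passed as `audio` is treated as a list of inputs, not as one signal. Convert `samples` to an array type first, for example `numpy.array(samples, dtype=numpy.float32)` (an assumption to verify against the source), and then call `transcribe(data, rate=rate)`.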

Parameters:

| Name | Description | Default |
|---|---|---|
| `audio` | an audio file or raw audio data, or a list of these | required |
| `rate` | sample rate; only required with raw audio data | `None` |
| `chunk` | process audio in segments of `chunk` seconds | `10` |
| `join` | if `True` (default), combine the chunks back together into a single text output; if `False`, return the chunks as a list of dicts, each holding the associated raw audio and sample rate in addition to the text | `True` |
| `kwargs` | keyword arguments for generation | `{}` |
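The interaction of `chunk` and `join` can be illustrated with the following sketch. It is not the pipeline's implementation. `fake_transcribe` is a hypothetical stand-in for the speech model. The dictionary keys `text`, `raw` and `rate` are assumed names for the text, raw audio and sample rate that `join=False` returns.

```python
def fake_transcribe(segment, rate):
    """Hypothetical stand-in for the speech model: reports the segment length in seconds."""
    return f"{len(segment) / rate:g}s"


def transcribe_chunks(samples, rate, chunk=10, join=True):
    """Illustrative chunking: split raw samples into `chunk`-second segments."""
    size = int(chunk * rate)  # number of samples per segment
    results = []
    for start in range(0, len(samples), size):
        segment = samples[start:start + size]
        # Assumed key names for the text, raw audio and sample rate of each chunk
        results.append({"text": fake_transcribe(segment, rate), "raw": segment, "rate": rate})

    # join=True merges the per-chunk text into one string; join=False keeps the dicts
    return " ".join(result["text"] for result in results) if join else results


# 25 seconds of silence at 16 kHz
audio = [0.0] * (25 * 16000)

print(transcribe_chunks(audio, 16000))                   # 10s 10s 5s
print(len(transcribe_chunks(audio, 16000, join=False)))  # 3
```

This page does not say how the real pipeline separates joined chunk text or whether it overlaps segments at their boundaries. Either may differ from this sketch.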

Returns:

| Type | Description |
|---|---|
| `str` or `list` | transcribed text: a string for a single audio input, a list for a list of inputs |
