Use Cases

The following sections introduce common txtai use cases. A comprehensive set of over 50 example notebooks and applications is also available.

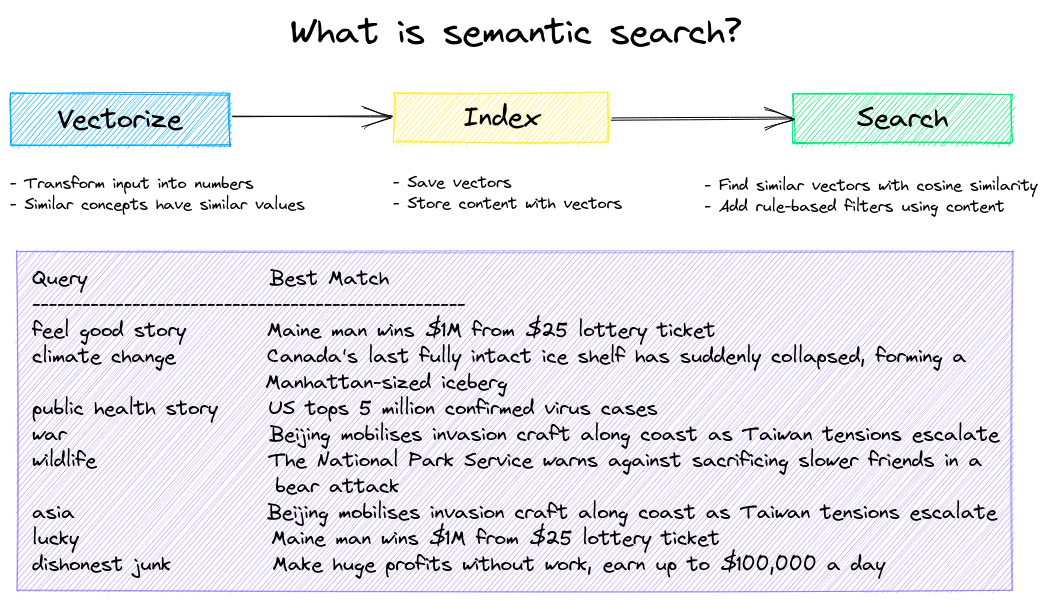

Semantic Search

Build semantic/similarity/vector/neural search applications.

Traditional search systems use keywords to find data. Semantic search has an understanding of natural language and identifies results that have the same meaning, not necessarily the same keywords.
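The difference between keyword matching and matching by meaning can be sketched in a few lines of plain Python. This is only an illustration, not txtai's API: the three-dimensional vectors are hand-written stand-ins for embeddings that a language model would normally compute, and `keyword_search` and `semantic_search` are hypothetical helper names.

```python
import math

# Hypothetical hand-written "embeddings" (assumption: a real system would
# compute these vectors with a language model).
DOCUMENTS = {
    "The ice shelf collapsed after a record warm summer": [0.9, 0.1, 0.0],
    "A man won the lottery with a cheap ticket": [0.0, 0.2, 0.9],
    "Hospitals report a rise in flu cases": [0.1, 0.9, 0.1],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def keyword_search(query, documents):
    """Return documents that share at least one literal word with the query."""
    terms = set(query.lower().split())
    return [text for text in documents if terms & set(text.lower().split())]


def semantic_search(query_vector, documents, limit=1):
    """Return the documents whose vectors are closest to the query vector."""
    scored = [(cosine(query_vector, vector), text) for text, vector in documents.items()]
    scored.sort(reverse=True)
    return [text for _, text in scored[:limit]]


# The query "climate change" shares no words with any document.
keyword_hits = keyword_search("climate change", DOCUMENTS)

# Hypothetical embedding for the same query, placed near the first document.
semantic_hits = semantic_search([0.8, 0.2, 0.1], DOCUMENTS)
```

In this toy setup the keyword search returns nothing, while the vector search still ranks the ice shelf sentence first because its vector points in nearly the same direction as the query vector.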

Get started with the following examples.

| Notebook | Description |
|---|---|
| Introducing txtai ▶️ | Overview of the functionality provided by txtai |
| Similarity search with images | Embed images and text into the same space for search |
| Build a QA database | Question matching with semantic search |
| Semantic Graphs | Explore topics, data connectivity and run network analysis |

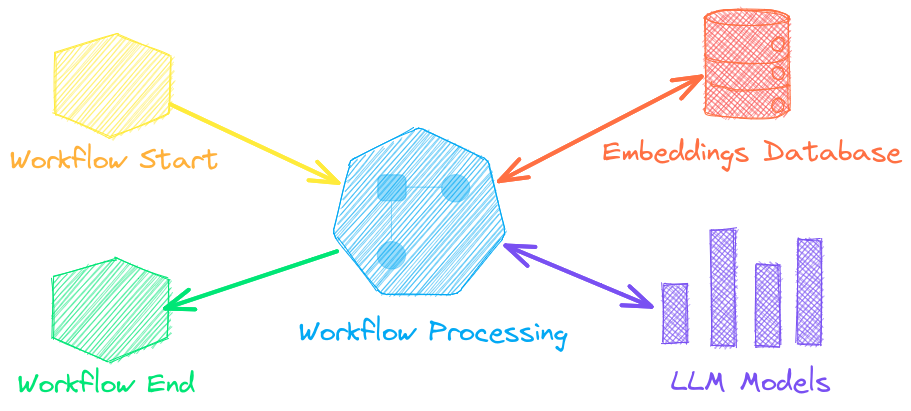

LLM Orchestration

LLM chains, retrieval augmented generation (RAG), chat with your data, pipelines and workflows that interface with large language models (LLMs).

Chains

Integrate LLM chains (known as workflows in txtai), multiple LLM agents and self-critique.

See below to learn more.

| Notebook | Description |
|---|---|
| Prompt templates and task chains | Build model prompts and connect tasks together with workflows |
| Integrate LLM frameworks | Integrate llama.cpp, LiteLLM and custom generation frameworks |
| Build knowledge graphs with LLMs | Build knowledge graphs with LLM-driven entity extraction |

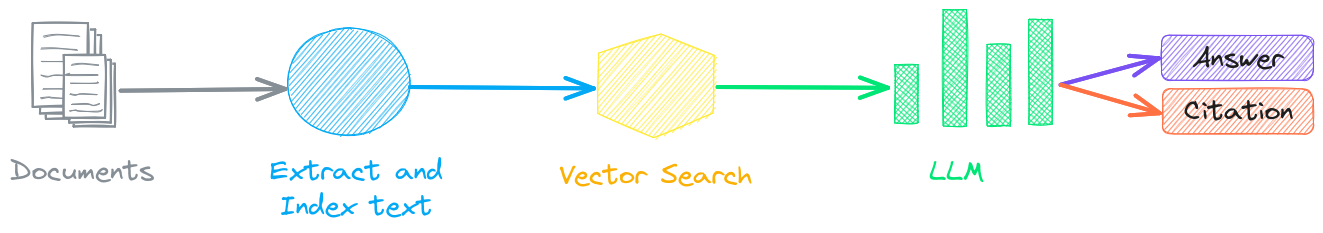

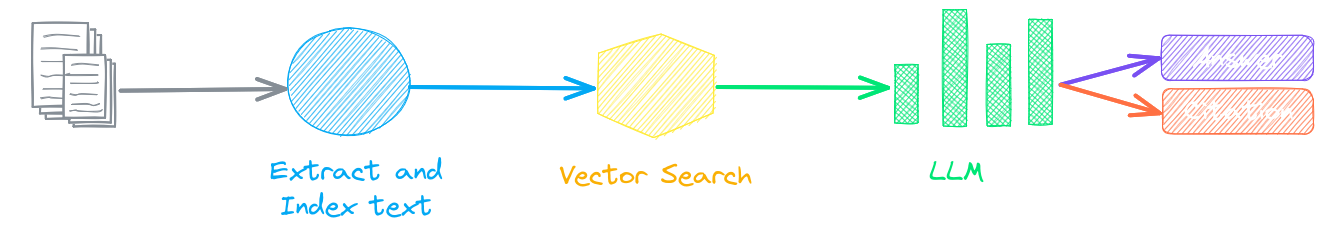

Retrieval augmented generation

Retrieval augmented generation (RAG) reduces the risk of LLM hallucinations by constraining the output with a knowledge base as context. RAG is commonly used to "chat with your data".

A novel feature of txtai is that it can provide both an answer and a source citation.
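The general RAG pattern, including how an answer can carry its source citations, might look roughly like the following. This is a minimal sketch under stated assumptions rather than txtai's implementation: retrieval is approximated with simple word overlap instead of vector search, the knowledge base contents are made up, and `retrieve`, `build_prompt`, `rag` and `stub_llm` are hypothetical names.

```python
import re


def tokens(text):
    """Lowercased word tokens with punctuation removed."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question, knowledge_base, limit=2):
    """Rank passages by word overlap with the question (stand-in for vector search)."""
    terms = tokens(question)
    scored = [(len(terms & tokens(text)), source, text) for source, text in knowledge_base.items()]
    scored.sort(reverse=True)
    return [(source, text) for overlap, source, text in scored[:limit] if overlap > 0]


def build_prompt(question, passages):
    """Constrain the model to the retrieved context and ask for bracketed citations."""
    context = "\n".join(f"[{source}] {text}" for source, text in passages)
    return (
        "Answer the question using only the context below. "
        "Cite the source id in brackets.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


def rag(question, knowledge_base, llm):
    """Retrieve context, prompt the model, and return the answer with its sources."""
    passages = retrieve(question, knowledge_base)
    answer = llm(build_prompt(question, passages))
    return {"answer": answer, "citations": [source for source, _ in passages]}


def stub_llm(prompt):
    # Placeholder for a real model call (assumption); returns a canned answer.
    return "Two years [manual-p3]"


# Made-up knowledge base keyed by source id.
KNOWLEDGE_BASE = {
    "manual-p3": "The Acme widget ships with a two-year warranty.",
    "manual-p7": "Clean the widget with a dry cloth.",
    "faq-1": "Shipping takes five business days.",
}

result = rag("How long is the Acme widget warranty?", KNOWLEDGE_BASE, stub_llm)
```

Because the source ids travel with the retrieved passages, the caller can show the answer next to the documents it was grounded in, whatever model produces the text.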

| Notebook | Description |
|---|---|
| Build RAG pipelines with txtai | Guide on retrieval augmented generation including how to create citations |
| How RAG with txtai works | Create RAG processes, API services and Docker instances |
| Advanced RAG with graph path traversal | Graph path traversal to collect complex sets of data for advanced RAG |
| Advanced RAG with guided generation | Retrieval Augmented and Guided Generation |

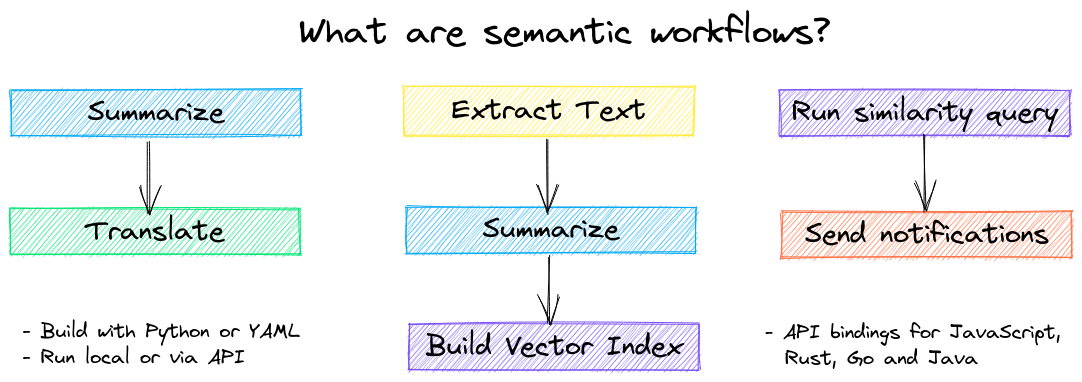

Language Model Workflows

Language model workflows, also known as semantic workflows, connect language models together to build intelligent applications.

While LLMs are powerful, there are plenty of smaller, more specialized models that work better and faster for specific tasks. This includes models for extractive question-answering, automatic summarization, text-to-speech, transcription and translation.
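The idea of connecting small, specialized steps into one workflow can be sketched as a chain of callables, where each step's output feeds the next. This is an illustration only, not txtai's workflow API: `run_workflow` is a hypothetical helper, and the step functions are trivial stand-ins for real models such as a summarizer or translator.

```python
def strip_whitespace(text):
    """Collapse runs of whitespace (stand-in for a text cleanup step)."""
    return " ".join(text.split())


def first_sentence(text):
    """Keep only the first sentence (stand-in for a summarization model)."""
    return text.split(". ")[0].rstrip(".") + "."


def shout(text):
    """Uppercase the text (stand-in for a translation model)."""
    return text.upper()


def run_workflow(steps, items):
    """Apply each step to every item, passing results on to the next step."""
    for step in steps:
        items = [step(item) for item in items]
    return items


results = run_workflow(
    [strip_whitespace, first_sentence, shout],
    ["  Workflows chain   models. Each step feeds the next.  "],
)
```

Each step only needs to accept one item and return one item, so a stand-in can later be swapped for a real model call without changing the chain itself.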

| Notebook | Description |
|---|---|
| Run pipeline workflows ▶️ | Simple yet powerful constructs to efficiently process data |
| Building abstractive text summaries | Run abstractive text summarization |
| Transcribe audio to text | Convert audio files to text |
| Translate text between languages | Streamline machine translation and language detection |