Tabular

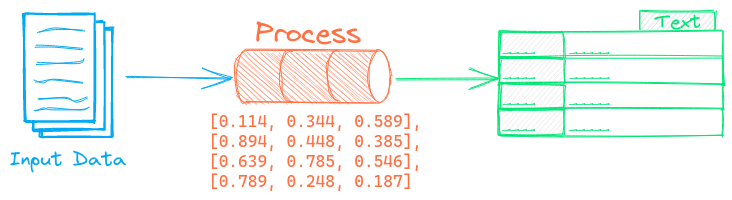

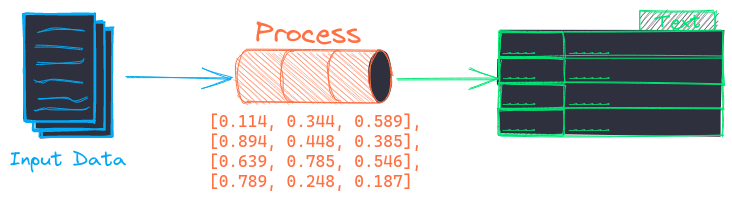

The Tabular pipeline splits tabular data into rows and columns. It is most useful for creating (id, text, tag) tuples to load into embeddings indexes.

Example

The following shows a simple example using this pipeline.

from txtai.pipeline import Tabular

# Create and run pipeline
tabular = Tabular("id", ["text"])
tabular("path to csv file")
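To make the output shape concrete, here is a minimal standard-library sketch of the kind of transformation described above. It is not txtai's implementation: the function name `rows_to_tuples` is hypothetical, the `". "` separator used to join text columns is an assumption, and ids stay as strings because the `csv` module does no type conversion.

```python
import csv
import io


def rows_to_tuples(source, idcolumn=None, textcolumns=None):
    """Illustrative stand-in, not txtai code: turn CSV rows into (id, text, tag) tuples."""
    results = []
    for index, row in enumerate(csv.DictReader(source)):
        # Use the id column when one is given, otherwise fall back to the row position
        uid = row[idcolumn] if idcolumn else index
        # Default to every column except the id column
        columns = textcolumns or [name for name in row if name != idcolumn]
        # Assumption: text columns are joined with ". "
        text = ". ".join(row[name] for name in columns if row[name])
        # No tag is assigned in this sketch
        results.append((uid, text, None))
    return results


data = io.StringIO("id,text\n1,first row\n2,second row\n")
tuples = rows_to_tuples(data, "id", ["text"])
print(tuples)
# [('1', 'first row', None), ('2', 'second row', None)]
```

Each tuple has the (id, text, tag) shape that the pipeline is described as producing, which is why its output can be passed on to an index without further reshaping.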

See the link below for a more detailed example.

| Notebook | Description |
|---|---|
| Transform tabular data with composable workflows | Transform, index and search tabular data |

Configuration-driven example

Pipelines are run with Python or configuration. Pipelines can be instantiated in configuration using the lower case name of the pipeline. Configuration-driven pipelines are run with workflows or the API.

config.yml

# Create pipeline using lower case class name
tabular:
  idcolumn: id
  textcolumns:
    - text

# Run pipeline with workflow
workflow:
  tabular:
    tasks:
      - action: tabular

Run with Workflows

from txtai import Application

# Create and run pipeline with workflow
app = Application("config.yml")
list(app.workflow("tabular", ["path to csv file"]))

Run with API

CONFIG=config.yml uvicorn "txtai.api:app" &

curl \
  -X POST "http://localhost:8000/workflow" \
  -H "Content-Type: application/json" \
  -d '{"name":"tabular", "elements":["path to csv file"]}'
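The same request can be issued from Python. The sketch below mirrors the curl call using only the standard library; the helper names `build_workflow_request` and `run_workflow` are hypothetical, and decoding the response as JSON is an assumption about the API's reply format.

```python
import json
import urllib.request


def build_workflow_request(name, elements, base_url="http://localhost:8000"):
    """Build (but do not send) the POST request used by the curl example."""
    payload = json.dumps({"name": name, "elements": elements}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/workflow",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def run_workflow(name, elements):
    """Send the request; requires the API server to be running.

    Assumption: the response body is JSON.
    """
    with urllib.request.urlopen(build_workflow_request(name, elements)) as response:
        return json.loads(response.read().decode("utf-8"))


# Building the request does not contact the server
request = build_workflow_request("tabular", ["path to csv file"])
```

Calling `run_workflow("tabular", ["path to csv file"])` sends the request once the server started with `CONFIG=config.yml` is up.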

Methods

Python documentation for the pipeline.

__init__(idcolumn=None, textcolumns=None, content=False)

Creates a new Tabular pipeline.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| `idcolumn` | | column name to use for row id | `None` |
| `textcolumns` | | list of columns to combine as a text field | `None` |
| `content` | | if True, a dict per row is generated with all fields. If content is a list, a subset of fields is included in the generated rows. | `False` |
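The three forms of `content` can be illustrated with a small sketch. This is not the library's code: `row_content` is a hypothetical helper that only mirrors the parameter description (all fields for `True`, a subset for a list, nothing for `False`).

```python
def row_content(row, content):
    """Hypothetical helper, not txtai code: pick the fields kept for one row."""
    if isinstance(content, list):
        # A list selects a subset of fields
        return {name: row[name] for name in content if name in row}
    if content:
        # True keeps every field
        return dict(row)
    # False (the default) produces no per-row dict
    return None


row = {"id": 1, "text": "first row", "author": "sam"}

all_fields = row_content(row, True)         # every field in the row
subset = row_content(row, ["text"])         # only the listed fields
nothing = row_content(row, False)           # no dict generated
```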

Source code in txtai/pipeline/data/tabular.py


__call__(data)

Splits data into rows and columns.

Parameters:

| Name | Type | Description | Default |
|---|---|---|---|
| `data` | | input data | required |

Returns:

| Type | Description |
|---|---|
| | list of (id, text, tag) |

Source code in txtai/pipeline/data/tabular.py
