Textractor

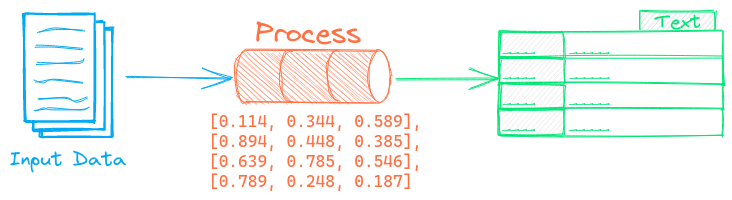

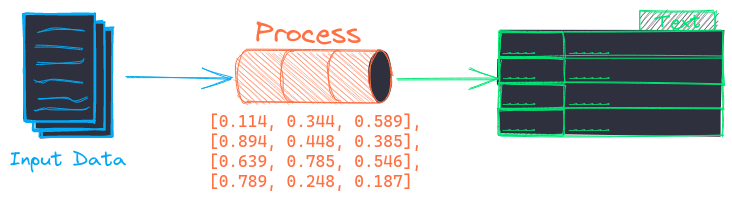

The Textractor pipeline extracts and splits text from documents. This pipeline extends the Segmentation pipeline.

Each document goes through the following process.

- Content is retrieved if it's not local

- If the document

mime-typeisn't plain text or HTML, it's converted to HTML via the FiletoHTML pipeline - HTML is converted to Markdown via the HTMLToMarkdown pipeline

- Content is split/chunked based on the segmentation parameters and returned

The backend parameter sets the FileToHTML pipeline backend. If a backend isn't available, this pipeline assumes input is HTML content and only converts it to Markdown.

See the FiletoHTML and HTMLToMarkdown pipelines to learn more on the dependencies necessary for each of those pipelines.

Example

The following shows a simple example using this pipeline.

from txtai.pipeline import Textractor

# Create and run pipeline

textract = Textractor()

textract("https://github.com/neuml/txtai")

See the link below for a more detailed example.

| Notebook | Description | |

|---|---|---|

| Extract text from documents | Extract text from PDF, Office, HTML and more | |

| Chunking your data for RAG | Extract, chunk and index content for effective retrieval |

Configuration-driven example

Pipelines are run with Python or configuration. Pipelines can be instantiated in configuration using the lower case name of the pipeline. Configuration-driven pipelines are run with workflows or the API.

config.yml

# Create pipeline using lower case class name

textractor:

# Run pipeline with workflow

workflow:

textract:

tasks:

- action: textractor

Run with Workflows

from txtai import Application

# Create and run pipeline with workflow

app = Application("config.yml")

list(app.workflow("textract", ["https://github.com/neuml/txtai"]))

Run with API

CONFIG=config.yml uvicorn "txtai.api:app" &

curl \

-X POST "http://localhost:8000/workflow" \

-H "Content-Type: application/json" \

-d '{"name":"textract", "elements":["https://github.com/neuml/txtai"]}'

Methods

Python documentation for the pipeline.

__init__(sentences=False, lines=False, paragraphs=False, minlength=None, join=False, sections=False, cleantext=True, chunker=None, headers=None, backend='available', **kwargs)

Source code in txtai/pipeline/data/textractor.py

23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 | |

__call__(text)

Segments text into semantic units.

This method supports text as a string or a list. If the input is a string, the return type is text|list. If text is a list, a list of returned, this could be a list of text or a list of lists depending on the tokenization strategy.

Parameters:

| Name | Type | Description | Default |

|---|---|---|---|

text

|

text|list |

required |

Returns:

| Type | Description |

|---|---|

|

segmented text |

Source code in txtai/pipeline/data/segmentation.py

66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 | |